A special report by Aishwarya Mahesh, Energy & Environmental Sales Analyst with the ADMIS Houston division.

Power Systems

Every time an AI model parses terabytes of data for insights or answers millions of user queries in seconds, it relies on a vast, hidden reservoir of computational power. Even as the technology becomes more efficient, its power demand will only increase over time. As AI transforms industries and reshapes our digital landscape, one question looms large: will our power systems adapt to sustain this AI revolution?

The Growing Energy Crisis

A single query to ChatGPT consumes about 2.9 watt-hours (Wh) of energy, nearly ten times that of a typical Google search, which uses around 0.3 Wh. Multiply that by billions of queries daily, and the total rivals a small city’s entire electricity use.
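To make the scale concrete, the arithmetic can be sketched in a few lines of Python. The per-query figures come from the paragraph above; the daily query count of one billion is a placeholder assumption chosen for illustration, not a reported number.

```python
# Per-query energy figures quoted in the text, in watt-hours (Wh).
CHATGPT_WH_PER_QUERY = 2.9
SEARCH_WH_PER_QUERY = 0.3

# Assumption for illustration only: one billion queries per day.
ASSUMED_QUERIES_PER_DAY = 1_000_000_000


def daily_energy_gwh(wh_per_query: float, queries_per_day: int) -> float:
    """Total daily energy in gigawatt-hours (1 GWh = 1e9 Wh)."""
    return wh_per_query * queries_per_day / 1e9


ratio = CHATGPT_WH_PER_QUERY / SEARCH_WH_PER_QUERY
chatgpt_gwh = daily_energy_gwh(CHATGPT_WH_PER_QUERY, ASSUMED_QUERIES_PER_DAY)
search_gwh = daily_energy_gwh(SEARCH_WH_PER_QUERY, ASSUMED_QUERIES_PER_DAY)

print(f"Energy ratio per query: {ratio:.1f}x")
print(f"AI queries:     {chatgpt_gwh:.1f} GWh/day")
print(f"Search queries: {search_gwh:.1f} GWh/day")
```

Under that assumed volume, the AI queries come to about 2.9 GWh per day against roughly 0.3 GWh for the same number of searches. Changing `ASSUMED_QUERIES_PER_DAY` scales both totals linearly.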

Beyond the cumulative energy, there’s also the issue of power: the capacity needed at any moment to meet this demand. For example, in Texas, a major hub for digital infrastructure, peak power demand is projected to reach 152 gigawatts (GW) by 2030, almost double the grid’s current capacity. That’s like trying to power every home in Texas and California on the hottest day of the year, twice. While such peaks are rare, the grid must be able to handle them.

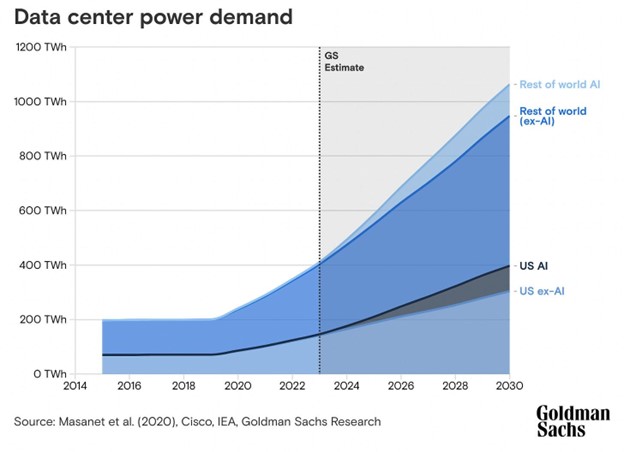

Demand is outpacing energy supply across the U.S., not just in Texas. John Ketchum, CEO of NextEra Energy, estimates that U.S. power demand will grow by 40% over the next two decades, compared to just 9% growth in the past 20 years. And the global picture is just as stark: data centers currently consume 1-2% of global electricity, a figure expected to soar. By 2034, global energy consumption by data centers is projected to exceed 1,580 TWh annually, equivalent to the total electricity used by all of India in a single year.
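The 40% and 9% figures are easier to compare as annual rates. The sketch below converts each into an implied compound annual growth rate; it assumes growth compounds smoothly over the 20-year window, which is a simplification rather than anything stated in the estimate itself.

```python
def annualized_growth(total_growth: float, years: int) -> float:
    """Compound annual growth rate implied by a total growth over `years`.

    Assumes smooth compounding, i.e. (1 + rate) ** years == 1 + total_growth.
    """
    return (1 + total_growth) ** (1 / years) - 1


# Figures from the text: 40% projected vs. 9% historical, each over 20 years.
projected = annualized_growth(0.40, 20)
historical = annualized_growth(0.09, 20)

print(f"Projected:  {projected:.2%} per year")
print(f"Historical: {historical:.2%} per year")
print(f"Ratio:      {projected / historical:.1f}x")
```

On these assumptions the projected rate works out to about 1.70% per year against roughly 0.43% historically, close to a fourfold acceleration.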

Unaccounted for in these estimates is the accelerating pace of AI innovation. Emerging reasoning models, such as OpenAI’s o3, require significantly more energy during inference as they process queries through step-by-step reasoning, mimicking human problem-solving. This iterative approach introduces a new tier of computational intensity, making inference increasingly expensive and marking a notable shift from traditional models, where training was the primary driver of energy consumption. Beyond these technical advancements, broader consumer adoption is set to amplify energy demand even further. From AI-powered assistants to autonomous vehicles and robotics, the integration of intelligent systems into everyday life will magnify their global energy footprint, pushing infrastructure to its limits.

The Supply Side Dilemma

The pressure of surging demand is exposing a critical shortcoming: AI’s exponential growth depends on infrastructure built for an entirely different era. Every server rack, cooling system, and algorithmic breakthrough relies on a grid that hasn’t undergone a transformation of this scale since World War II.

Once heralded as a marvel of engineering, today’s grid operates on borrowed time. Substations and transformers, originally built with a 50-year lifespan, are now approaching or exceeding 90 years in some regions. In critical areas like PJM[1], two-thirds of grid assets are more than 40 years old, creating vulnerabilities that ripple across the energy supply chain. Winter Storm Elliott in 2022 exposed this fragility, as millions lost power and systems like PJM strained to their limits. Similarly, the 2021 Texas winter storm left 4.5 million homes and businesses in the dark, not just from fuel shortages but from cascading equipment failures that also impacted critical data centers. These crises underscore a harsh reality: we are building AI systems for the future on power systems from the past.

This fragility is further compounded by the limitations of renewable energy sources like wind and solar, which, while expanding rapidly, remain too intermittent to reliably power AI infrastructure. Renewable energy depends on weather patterns, leaving grids vulnerable during peak demand. In 2020, California faced rolling blackouts during a heatwave as solar generation dropped in the evening, illustrating the Duck Curve[2], a sharp mismatch between midday energy generation and evening demand surges. Large-scale energy storage solutions, like grid-scale batteries, have shown promise but are not yet scalable enough to smooth out these fluctuations. This intermittency, coupled with the slow adoption of storage technologies, leaves renewables struggling to bridge the imbalance between supply and AI-driven demand.

Other energy sources, such as nuclear and geothermal, offer consistency but come with significant lead times. Building a nuclear power plant, for example, can take decades, while geothermal energy remains constrained by geographic limitations. These technologies hold promise for the long term but cannot address the immediate[3] energy needs of an AI-driven economy.

_____________________

[1] PJM stands for Pennsylvania-New Jersey-Maryland Interconnection, a nonprofit organization that manages the electric grid for a region in the eastern United States. PJM is responsible for the safety, security, and reliability of the power transmission system.

[2] The duck curve is a graph that shows the difference between electricity demand and solar energy generation over the course of a day. The curve’s shape resembles a duck, with a dip in the middle and a steep rise in the evening.

[3] Roughly five years, in the context of the power industry.

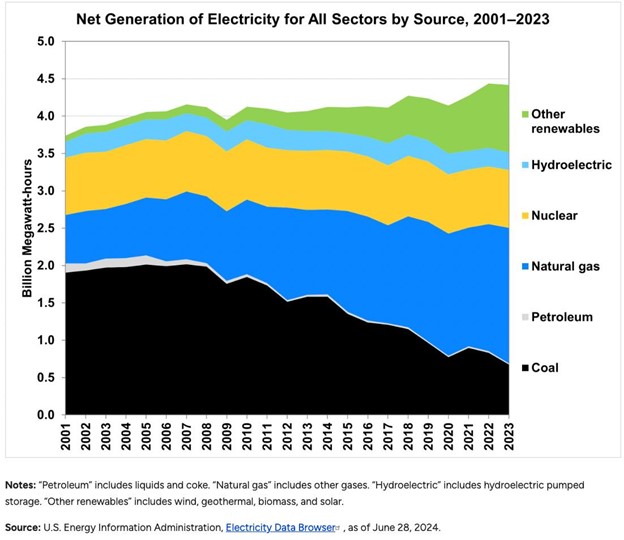

In the meantime, natural gas has emerged as the go-to fuel to meet demand quickly. Its scalability and reliability make it an attractive option, particularly in the U.S., where it has steadily grown to become the dominant source of electricity generation. However, this reliance comes at a cost. Natural gas is still a fossil fuel, and increasing its use locks the energy mix into a carbon-intensive resource pool. Meanwhile, in countries like China, coal remains the dominant energy source, with the nation prioritizing energy security and technological progress over environmental concerns. This raises a notable trade-off: should nations sacrifice sustainability goals in their pursuit of AI leadership?

Efficiency Gains and Jevons Paradox: The Hidden Catch

At first glance, improving energy efficiency seems like the golden ticket for managing AI’s growing power needs. Hardware advancements, such as Nvidia’s Blackwell GPUs, which promise to be 25 times more energy-efficient than their predecessors, are celebrated as breakthroughs. Yet history shows that efficiency alone rarely reduces overall consumption. This is the crux of Jevons Paradox, first articulated by economist William Stanley Jevons in the 19th century: as efficiency increases, the cost of using a resource falls, which often leads to greater overall consumption.

This paradox has echoed across industries. During the Industrial Revolution, more efficient steam engines reduced the cost of coal use but spurred industrial expansion, increasing total coal consumption. Similarly, fuel-efficient cars enabled longer, cheaper journeys, resulting in more driving and higher aggregate fuel use. In AI, the same dynamic is unfolding. Innovations in hardware and algorithms drastically lower the energy cost per computation, but they also enable the development of larger models, faster inference, and greater adoption, driving energy consumption further.
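The rebound logic described above can be illustrated with a toy model. The sketch below assumes a constant-elasticity demand curve and assumes that the cost per task falls in proportion to the energy per task; both are simplifications introduced here for illustration, and the elasticity values are hypothetical rather than measured.

```python
def total_energy(efficiency_gain: float, elasticity: float,
                 base_energy_per_task: float = 1.0,
                 base_tasks: float = 1.0) -> float:
    """Total energy use after an efficiency gain, in a toy rebound model.

    efficiency_gain: factor by which energy (and, by assumption, cost)
        per task falls, e.g. 4.0 means each task needs a quarter of the energy.
    elasticity: how strongly demand responds to cheaper tasks
        (hypothetical constant-elasticity demand curve).
    """
    energy_per_task = base_energy_per_task / efficiency_gain
    tasks = base_tasks * efficiency_gain ** elasticity
    return energy_per_task * tasks


# A 4x efficiency gain under three hypothetical demand responses.
for elasticity in (0.5, 1.0, 1.5):
    print(f"elasticity {elasticity}: total energy = "
          f"{total_energy(4.0, elasticity):.2f}x baseline")
```

In this model total energy falls only when elasticity is below 1. At exactly 1 the efficiency gain is fully absorbed by extra usage, and above 1 total consumption rises, which is the Jevons case.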

Even as GPUs and other hardware become more energy-efficient, the construction of hyperscale data centers imposes physical and economic constraints. Recently, President Trump, in collaboration with OpenAI, SoftBank, and Oracle, announced the ambitious Stargate Initiative, a private-sector investment of up to $500 billion in artificial intelligence infrastructure, aiming to build 20 data centers across the United States. These hyperscale facilities, with massive fixed costs for land, construction, cooling, and power infrastructure, are built with an economic imperative: maximize utilization. As GPUs become more efficient, the cost per computation decreases, encouraging companies to increase the density of computational tasks within the same infrastructure. This ensures data centers often operate at or near their maximum power capacity. Efficiency gains, rather than reducing total energy consumption, drive computational intensity within existing infrastructure, further amplifying demand, a classic example of the rebound effect described by Jevons Paradox.

For decades, Moore’s Law described exponential improvements in computing, with transistor density doubling every two years. As Satya Nadella has pointed out, in the AI era, a new scaling law governs progress: AI performance doubling every six months. This rapid growth reflects not just advancements in model capabilities but also a dramatic rise in computational demands, particularly for inference and test-time compute. This new scaling trajectory highlights the accelerating resource intensity of AI adoption.

OpenAI’s release of GPT-4o mini in 2024, a smaller, more efficient model, lowered costs and expanded access to businesses and consumers alike. Within a year, ChatGPT’s active user base doubled to over 200 million weekly users, driving query volumes to an estimated 3 billion per week. Despite reduced energy costs per query, the sheer scale of usage pushed total energy consumption to record levels, as efficiency gains were dwarfed by explosive growth in adoption.

A similar story is unfolding with Chinese startup DeepSeek and its R1 model. Engineered for high performance with significantly lower energy consumption per query, R1 rapidly climbed to the #1 spot on Apple’s App Store in both the U.S. and China. Its energy-efficient architecture, combined with lower development costs, fueled immediate global adoption, garnering millions of downloads in under a week. DeepSeek’s meteoric rise vividly illustrates Jevons Paradox in action. As models become more efficient and accessible, demand skyrockets, leading to greater overall resource consumption despite individual efficiency gains.
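The gap between the two doubling rates mentioned above, two years under Moore’s Law and six months in the AI era, compounds quickly. The short sketch below compares the cumulative growth each implies; the time horizons are arbitrary choices for illustration.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Cumulative growth after `years` if a quantity doubles every period."""
    return 2 ** (years / doubling_period_years)


MOORE_DOUBLING_YEARS = 2.0   # doubling every two years, per the text
AI_DOUBLING_YEARS = 0.5      # doubling every six months, per the text

# Horizons chosen only for illustration.
for years in (1, 2, 5):
    moore = growth_factor(years, MOORE_DOUBLING_YEARS)
    ai = growth_factor(years, AI_DOUBLING_YEARS)
    print(f"{years} yr: Moore's Law {moore:.1f}x, six-month doubling {ai:.0f}x")
```

Over two years, the slower curve doubles once while the faster one grows sixteenfold; over five years the six-month pace implies growth of more than a thousandfold.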

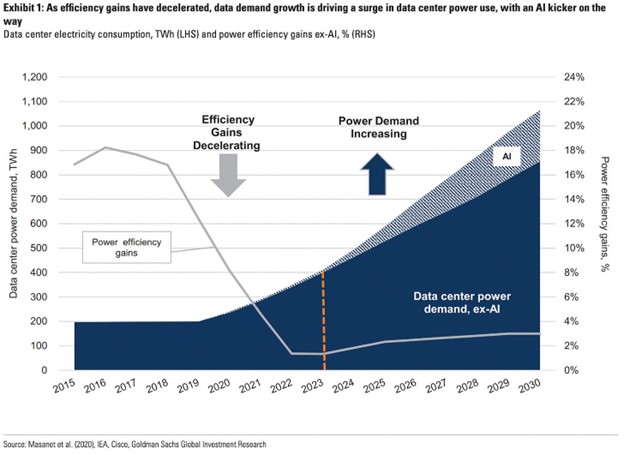

To add to this, system-wide efficiency of data centers is also plateauing. Advances in cooling, power usage effectiveness (PUE), and workload optimization once enabled data centers to absorb the growth of computational workloads with relatively stable power demand between 2015 and 2019. However, as a Goldman Sachs report highlights, these gains are no longer sufficient to offset the explosive growth of AI workloads.
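PUE is defined as total facility energy divided by the energy delivered to IT equipment, so it can never fall below 1.0. The sketch below shows why that floor limits how much system-level efficiency can offset workload growth. The PUE values and the threefold growth in IT load are hypothetical numbers chosen for illustration, not figures from the report.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def facility_energy(it_equipment_kwh: float, pue_value: float) -> float:
    """Total facility energy implied by an IT load and a PUE."""
    return it_equipment_kwh * pue_value


# Hypothetical scenario: PUE improves from 1.5 to 1.2 while IT load triples.
before = facility_energy(it_equipment_kwh=100.0, pue_value=1.5)
after = facility_energy(it_equipment_kwh=300.0, pue_value=1.2)

# Best case: even a perfect PUE of 1.0 cannot shrink the IT load itself.
best_case = facility_energy(it_equipment_kwh=300.0, pue_value=1.0)

print(f"Before:    {before:.0f} kWh")
print(f"After:     {after:.0f} kWh ({after / before:.1f}x)")
print(f"PUE floor: {best_case:.0f} kWh ({best_case / before:.1f}x)")
```

In this scenario total energy still rises 2.4 times despite the better PUE, and even an ideal facility would use twice the original energy, because overhead savings cannot offset growth in the IT load.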

Efficiency, while critical, has lowered the barrier to entry for AI systems, fueling widespread adoption and embedding AI into everyday life. This same efficiency has also enabled the creation and deployment of increasingly complex models. As a result, efficiency gains accelerate energy demand across industries and applications rather than curbing it, proving Jevons Paradox true once again.

Existing Actions and Gaps: Where We Are Now

These mounting pressures on energy systems haven’t gone unnoticed. Both industries and governments have begun to respond, laying the groundwork for a more resilient energy future. Tech giants’ investments in innovative technologies, grid modernization, and alternative energy sources represent the first steps toward addressing growing energy demands.

Google, for instance, has partnered with Fervo Energy to launch an advanced geothermal energy project in Nevada, aiming to tap into a reliable and renewable energy source. Similarly, Microsoft has signed a 20-year agreement with Constellation Energy to restart Unit 1 of the Three Mile Island nuclear plant in Pennsylvania, securing a stable, carbon-free energy supply for its data centers. Amazon Web Services is also investing $11 billion in Georgia to build out infrastructure capable of supporting AI workloads, reflecting the massive capital investments required to sustain AI-driven technologies.

On the policy side, governments are beginning to engage with the challenges posed by AI’s energy footprint. In the U.S., the Department of Energy is planning to use AI as a critical tool for modernizing the grid, with initiatives aimed at improving planning, permitting, and operations to enhance energy efficiency and reliability. Across the Atlantic, the European Union’s AI Act emphasizes energy efficiency standards and transparency in energy reporting for high-risk AI systems, signaling a growing recognition of AI’s environmental impact.

While meaningful, these measures are not without limitations. As mentioned before, geothermal and nuclear projects, like renewable energy installations, require years to scale and deploy effectively. Similarly, grid upgrades and modernizations remain incremental, creating a growing mismatch between the pace of AI adoption and the evolution of our energy infrastructure. The rapid growth of AI-driven technologies continues to outpace the rate at which energy systems can adapt, underscoring the need for faster and more coordinated action.

Still, these efforts reflect an important start and an acknowledgment of where existing energy systems stand. This is only one part of a much larger conversation. The relationship between energy and AI is deeply intertwined, touching on everything from geopolitical dynamics and resource dependencies to energy market volatility and global supply chains. Understanding these complexities will be critical as we navigate not just the technical challenges but also the broader societal and economic trade-offs that lie ahead.

The data, comments and/or opinions contained herein are provided solely for informational purposes by ADM Investor Services, Inc. (“ADMIS”) and in no way should be construed to be data, comments or opinions of the Archer Daniels Midland Company. This report includes information from sources believed to be reliable and accurate as of the date of this publication, but no independent verification has been made and we do not guarantee its accuracy or completeness. Opinions expressed are subject to change without notice. This report should not be construed as a request to engage in any transaction involving the purchase or sale of a futures contract and/or commodity option thereon. The risk of loss in trading futures contracts or commodity options can be substantial, and investors should carefully consider the inherent risks of such an investment in light of their financial condition. Any reproduction or retransmission of this report without the express written consent of ADMIS is strictly prohibited. Again, the data, comments and/or opinions contained herein are provided by ADMIS and NOT the Archer Daniels Midland Company. Copyright (c) ADM Investor Services, Inc.

Futures and options trading involve significant risk of loss and may not be suitable for everyone. Therefore, carefully consider whether such trading is suitable for you in light of your financial condition. The information and comments contained herein is provided by ADMIS and in no way should be construed to be information provided by ADM. The author of this report did not have a financial interest in any of the contracts discussed in this report at the time the report was prepared. The information provided is designed to assist in your analysis and evaluation of the futures and options markets. However, any decisions you may make to buy, sell or hold a futures or options position on such research are entirely your own and not in any way deemed to be endorsed by or attributed to ADMIS. Copyright ADM Investor Services, Inc.